Riding the Token Wave: AI is Here and the Future is Brighter Than You Think

This post is adapted from my talk at Everything NYC / Sanity in January 2025. Watch the video.

All of us should have dozens of agents in our pocket running, working for us, right now.

That’s not a prediction - it’s a statement about what’s possible today. The question is: why don’t we? And more importantly, how do we get there?

I’ve spent a lot of my career thinking about how to make the intentions in our work more visible and automate the drudgery. At OpenAI, I worked on alignment research. Before that, I started and sold some companies, all focused on developer tooling, compilers, testing - that kind of thing. The common thread? Taking what’s in your head and making it real, with less friction.

But here’s the thing - what I’m about to describe isn’t really about coding. It’s about the universal interface that all humans have to use to understand a domain, communicate intent, and solve problems at scale. Coding just happens to be where I’ve spent most of my time.

The New Utility

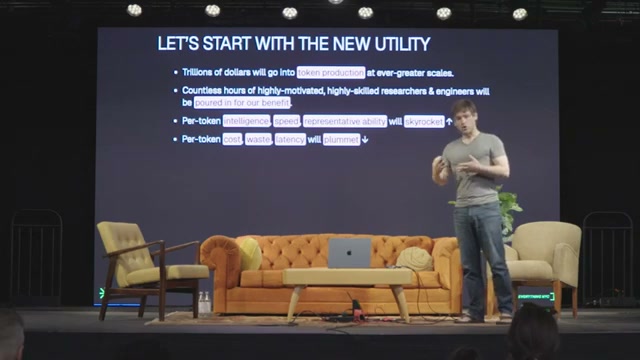

These numbers seem fanciful, but they’re real: in the next five to ten years, literally trillions of dollars will be invested in generating tokens at massive scale. And alongside those dollars, millions of hours from hugely capable, motivated researchers and engineers are going to be poured into this - for our benefit.

What does that mean practically? Per token, over the next couple of years:

- Intelligence will skyrocket

- Speed will increase dramatically

- The ability to represent things we care about will expand

- Cost, latency, and waste will plummet

The net effect is that there will literally be waves of tokens washing through data centers and across the internet. And just like any other wave - rivers with hydropower, steam, solar - we’re entering an age where we have an abundant source of cognitive power.

But here’s the challenge: how do you actually harness that to make things better? Can we turn tokens into a form of propulsion?

The Steam Engine Analogy

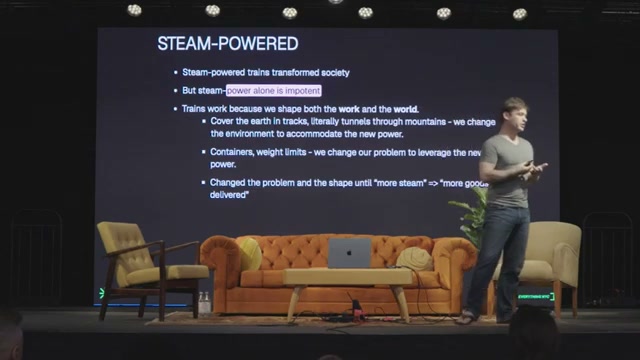

Given the venue (and because I think about this stuff constantly), here’s the analogy that keeps coming back to me: the steam engine.

Steam trains literally transformed society. But here’s the ironic part - steam power by itself is completely impotent. It can’t do anything on its own.

It only works because humanity literally changed the shape of the earth to accommodate it, and then changed their problems to fit into a train shape.

We covered the earth in tracks. We drilled holes through mountains. And then we changed all of our problems to fit into containers, adhering to weight restrictions and dimensions. We iterated on this so much that eventually, if you just added more steam, you got more goods or more people delivered.

With token-powered systems, we have to do the same thing. We have to build the infrastructure that allows token power to access our world. And then we have to figure out how to shape our problems in a way that can be pushed forward on this new infrastructure.

The nice thing is that the AI labs are all putting massive money into understanding what shared infrastructure is needed. They’re working hard to “unhobble” LLMs at scale.

That means your leverage is in understanding how to unhobble an LLM in your specific domain - so the LLM understands what you’re trying to do and can power the changes you want to see happen.

When Power Outruns Shape

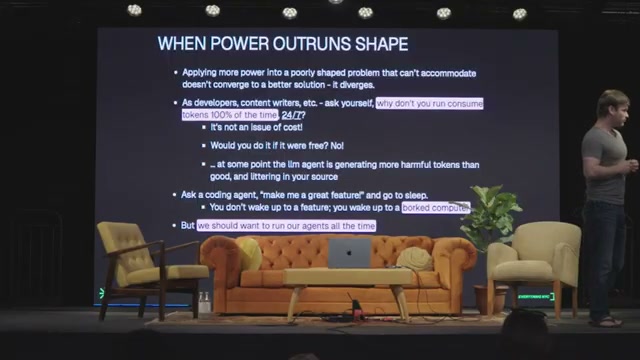

Here’s a question for you: if you’re a developer or content writer, why aren’t you running an LLM all the time, generating billions of tokens every day?

Does that sound like a good idea to anyone? Probably not.

You might think it’s a cost issue, but tokens are going to become basically free. The real issue is that even if it were free, you probably wouldn’t want to do this. It seems potentially destructive.

At some point, the LLM agent starts generating tokens that do more harm to your content or source code than good. If you ask a coding agent at night to “make me this feature,” describe what you want, and go to sleep - it’s very unlikely you wake up to a complete, nice surprise. More likely you wake up to a broken computer.

But we should aspire to run agents all the time. All of us should have dozens of agents in our pocket running, working for us right now.

The challenge is that we don’t know how to direct that power in a way we can trust at scale.

Your Role: Conductor

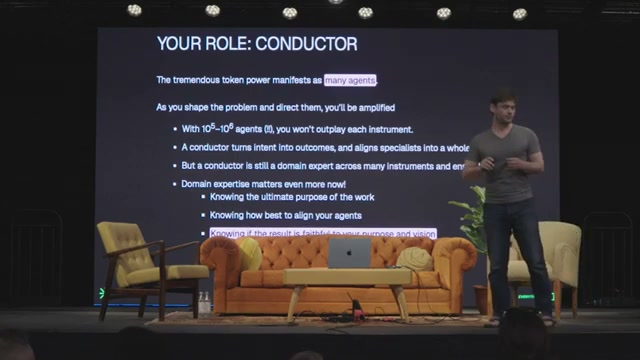

The way to harness this is to think of ourselves as conductors. Everyone else is providing tremendous amounts of virtual specialists and skilled laborers. At some point, all of us are going to have hundreds of thousands or millions of agents working for us individually.

These numbers seem insane. But if you were to take the number of transistors or the amount of memory we carelessly carry in our pockets back to 1960 or 1980, they wouldn’t have believed you either.

If we have this many agents working for us, we’re not going to be able to out-compete their sheer skill set and range of abilities. So it becomes our job to put together an ensemble and figure out how to express our intent, then convert that into an outcome we actually care about - powered by these specialists.

The thing about a conductor in an orchestra is they’re actually a master of dozens of instruments. And beyond that, they know how to put them together and make art.

In the same way, domain expertise is even more important in this world. You, as the expert who’s done tremendous work in your career - you know the purpose of this work. How will it be applied in the real world? What problems will it solve? You know how to arrange your team so they make forward progress. And at the end of the day, when the work comes in, you know how to review it and see if the result is faithful to your vision.

Taste and judgment matter tremendously. They just operate at a tremendously larger scale.

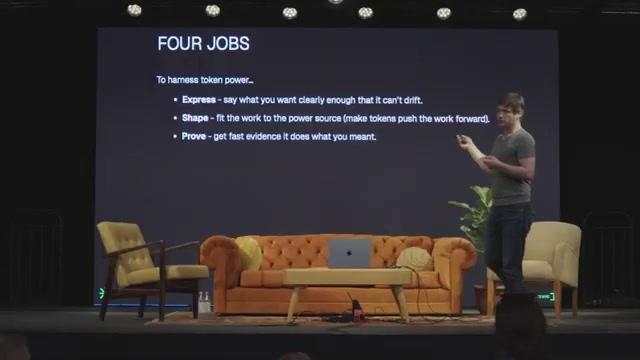

The Four Jobs

There are four things required to harness token power - and this is really general to any human problem:

-

Express - Say what you want clearly enough that it can’t drift. This is way more difficult than it sounds. If you’ve ever been given a product description you had to implement, you know that by the time you write it down, you’re missing half the information you need.

-

Shape - Fit the work to the power source. Make it so tokens push the work forward. You need to shape the problem so applying more tokens gives you monotonically increasing good results.

-

Prove - Get fast evidence it does what you meant. If you have hundreds of thousands of agents working on your behalf, they’re generating tremendous amounts of artifacts. You need some way to prove in the small that the work is faithful to your vision.

-

Scale - Once you can prove in that tight inner loop, you can literally turn up the amount of tokens you pour into the system and get better and better results at scale.

What This Looks Like in Practice

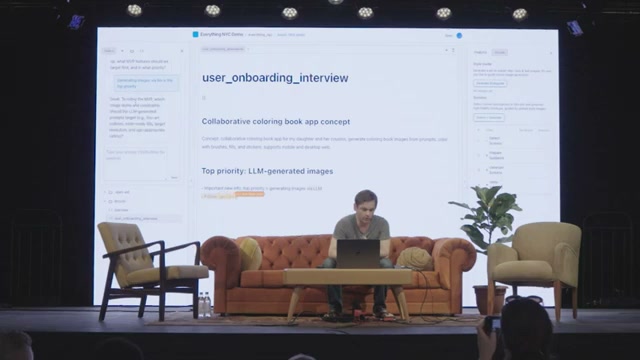

I’ve been building something that embodies these principles. Here’s how it works:

The first step is extracting from the user what they actually want to do. If you’ve ever prepared a talk or written a blog post, there’s often a step where you give it to an editor or debate with a friend. You have an interrogative partner who pulls highly clear thought from you.

Once you’ve captured that, you put it in a specification document - a living document containing decisions, implementation details, and everything else. When you send this off for someone (or something) to implement, the work needs to come back and relate to this document. You need to understand which part of the result proves the thing is implemented correctly.

The interface is simple: chat on the left (like we’re all familiar with), specification document in the middle, and introspection tools on the right.

I describe what I want to build - say, a collaborative coloring book app for my daughter. It should work on mobile, generate coloring book images via an LLM, and she can color them in with brushes, fills, and stickers (she loves stickers).

The system starts asking me questions, the same ones a partner would ask: what features should we target first, what are the priorities, what about image styles and constraints? It’s literally annoyed with me for not being specific enough - which is exactly what you want.

I can generate mood boards based on vague descriptions (“fun, bold, anime-inspired, warm”) and pin the one I like. Now all future generations will be driven by this style. I’m expressing my intent vaguely at first, then iteratively narrowing down what I want and require.

But the visual design is only part of it. The harder part is the rigor of thought.

Extracting Atomic Claims

If you’ve ever translated a product requirement into an actual application, you know code is much denser. You have to think about all the edge cases, contradictions, and ambiguities.

What we can do is walk over the specification document and extract out atomic, indivisible claims. What are you saying you want the world to look like when this application is built?

Then we can identify what’s ambiguous. For example, I said “kid friendly” but didn’t mention age range, content standards, or accessibility. That’s a perfectly good point. Maybe I don’t care in this case - it’s a minor app. But maybe it matters, and I should fix it.

Worse than ambiguity might be contradictions. I described an application that’s publicly accessible, has no accounts, and has some interaction. The system flags: “You’re allowing anonymous users to free draw and publish directly to public galleries. This is probably a bad idea.”

It’s extracting contradictions, ambiguities - things that would cause problems if I launched a million agents right now. We fix these problems immediately, when it’s fastest and freshest in mind.

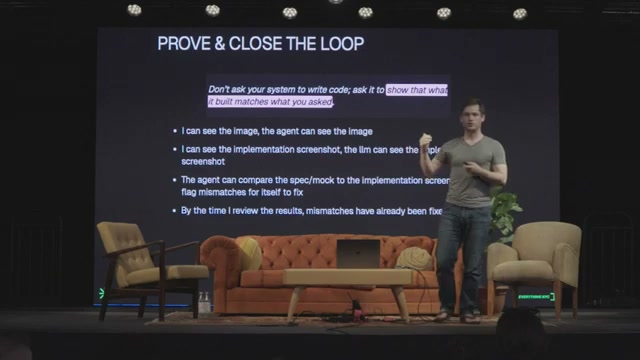

Prove and Close the Loop

The key insight is this: we don’t care about the code. We care about the properties we expect the code to exhibit. I don’t care how you formatted it. I want to make sure it’s safe, has the functionality, is secure, has the right performance.

There are high-level attributes I need to be convinced of, rather than reading the code. And it turns out English is a reasonably good programming language - it’s just missing a compiler, toolchain, and linter.

Once you have mockups in your specification, you’ve created a closed loop. The agent can see the mockups as they’re implementing. When they’re done, they can see what the implementation looks like. They can compare it to the spec and see if they messed up. If they did, they can flag that for their own subagents to fix.

You’ve turned this into a problem where you keep pouring more tokens into it, more compute, and it iterates more and more. By the time it gets to you, it’s almost certainly adhering to your specification.

Evidence Beats Vibes

Here’s the question I keep coming back to: what would it take for you to trust a 14 million line PR that touches something incredibly sensitive and business critical?

Right now, probably nothing could convince you. But at some point, you can imagine a system that’s able to prove to you that this massive change is safe, correct, and faithful to your intent.

The evidence is what convinces us. Not vibes - evidence.

We want to fix any problems way early in the process, as early as possible. If you have ambiguity and you launch a million agents and wake up in the morning, you’re likely to have a very bad time.

The Future is Brighter Than You Think

This isn’t about replacing developers or content creators. It’s about amplification. It’s about taking the things you’re already good at - domain expertise, taste, judgment, knowing what problems to solve - and scaling them up dramatically.

The people who will thrive in this world are the ones who can clearly express what they want, shape problems to be solved by tokens, prove that the solutions work, and scale up confidently.

All of us should have dozens of agents in our pocket running, working for us, right now. The infrastructure is being built. The only question is: are you ready to conduct?

References

- OpenAI - openai.com

- Sanity - sanity.io (host of Everything NYC conference)

- Model Spec - OpenAI Model Spec on GitHub (alignment specifications for AI models)

- Deliberative Alignment - OpenAI Research (technique for aligning models to specifications)

- Steam Engine History - Wikipedia: History of Rail Transport (infrastructure transformation analogy)

- “The New Code” - My AI Engineer talk on specifications as source code